Having used a feed reader for the better part of a year, I wanted to see if RSS could be augmented with a recommender system that could highlight the content I find more interesting. LSS is that solution, just through click-tracking it learns to predict what items you would read by their titles.

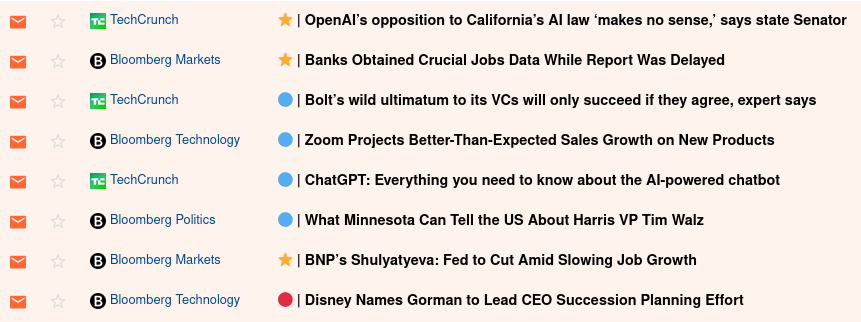

- See which RSS items you would read (⭐), might read (🔵), and

probably wouldn't read (🔴). The items you probably wouldn't read only

pass through the filter if you toggle

filter: falsein yourconfig.yml. - HTTP GET the URLs of all your filtered feeds at

/feeds, paths are auto generated to keep theconfig.ymlminimal. - Clicking an item's default link updates the title as average (🔵) in the database, and additional links for updating the item as either good (⭐) or poor (🔴) are added to the top of the item's description.

- Filter with an ensemble of classical TF-IDF regression and transformer-based DistilBERT classification.

- Toggle filtering for individual feeds or all feeds with the

filterandcold_startfields, respectively. - Set weights for each classifier, their weighted softmaxes are added up to classify items.

- Inference and most of the service runs inside the container, DistilBERT training runs on AWS Sagemaker and requires the AWS CLI to be configured on the host machine.

- Recommended to be run behind a reverse-proxy with authentication, default port is 5000.

- Copy over the

config.ymlandcrontabfromconfig/examplestoconfig, make any changes as you'd like. - If using DistilBERT, add an

iam_rolefield to the config that you'll have to create on AWS, also need to have an AWS config at~/.awsfor said IAM role. - Starting out, I recommend to set

cold_start: trueat the top of yourconfig.ymluntil you have enough data for the training cron jobs to work. - Run

docker compose up production -d

08/12/24 - Has labels for poor/average/good ratings that are associated with

red, blue, and star emojis in the generated feeds. TF-IDF classification and

DistilBERT had better F1 scores when they just did binary classification instead

of ternary, looking into a solution that is good for small datasets. Test

coverage needs improvement, alembic mostly unused so migrations are still done

manually, needs documentation for setup and probably an init script. Thinking of

including confidence scores in the generated feeds next to the emojis.

09/17/24 - Through passive use of the application I'm seeing better results i.e. getting over the cold-start hurdle. Here's a recent evaluation metric from the docker compose logs, note that initially the precision/recall scores were closer to that of a trivial random classifier (~0.33 for ternary classification):

lss-prod | {'eval_loss': 0.8556984066963196, 'eval_accuracy': 0.671875, 'eval_f1': 0.6756054421768708, 'eval_precision': 0.6959779006737331, 'eval_recall': 0.671875, 'eval_runtime':

│ 0.8686, 'eval_samples_per_second': 221.042, 'eval_steps_per_second': 3.454, 'epoch': 3.0}