[Tooling] Enable U Test by default, add tooltip about repetition count. #617

✅ Build benchmark 1287 completed (commit a8263d98e1 by @LebedevRI)

| if ((len(timings_time[0]) >= UTEST_MIN_REPETITIONS) and | ||

| (len(timings_time[1]) >= UTEST_MIN_REPETITIONS) and | ||

| (len(timings_cpu[0]) >= UTEST_MIN_REPETITIONS) and | ||

| (len(timings_cpu[1]) >= UTEST_MIN_REPETITIONS)): |

weird indent here.. is this correct?

This is correct, but the indent is indeed wrong.

Funny, autopep8 actually insists on this weird indent.

as long as it does the right thing :)
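
One way to sidestep the continuation-line indent question is to fold the four length checks into a single `all(...)` call. This is only a minimal sketch, assuming `timings_time` and `timings_cpu` are each a pair of sample lists as in the diff above. The value given to `UTEST_MIN_REPETITIONS` and the helper name `enough_repetitions` are placeholders, not taken from this PR.

```python
# Placeholder value; the real constant is defined in tools/gbench/report.py.
UTEST_MIN_REPETITIONS = 2


def enough_repetitions(timings_time, timings_cpu):
    """Return True when every sample list is long enough for the U test.

    Each argument is expected to be a pair [old_samples, new_samples].
    """
    return all(
        len(samples) >= UTEST_MIN_REPETITIONS
        for pair in (timings_time, timings_cpu)
        for samples in pair
    )


# Two samples on every side: the check passes.
print(enough_repetitions([[10, 9], [8, 10]], [[100, 90], [110, 89]]))
# One side has a single sample: the check fails.
print(enough_repetitions([[10], [8, 10]], [[100, 90], [110, 89]]))
```

The behaviour matches the chained `and` version; only the layout changes.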

tools/gbench/report.py

| ['BM_Two', '+0.1111', '-0.0111', '9', '10', '90', '89'], | ||

| ['BM_Two', '+0.2500', '+0.1125', '8', '10', '80', '89'], | ||

| ['U-test', '(p-value)', '0.2207', '0.6831'], | ||

| ['U-test', '(p-value)', '0.2207', '0.6831', 'Repetitions:', '2.', 'WARNING:', 'Results', 'unreliable!', '9+', 'repetitions', 'recommended.'], |

A comment on the output: should the 'U-test' row include the benchmark name, so that people running the output through grep/ag/whatever will catch the U-test row for the benchmark they want?

Ah, good idea..

How do you want it spelled, _utest_pvalue ?

or just BM_Two_p_value ?

I wanted to specify which test the value is for.

But I suppose I can do that next to the Repetitions: field.

Ah, you expect we might have more than one test. That's fair. BM_Two_utest_pvalue then?

But now that I have actually looked, a suffix as long as _utest_pvalue means the columns need to be realigned (since the first column may now be wider).

So _utest_pvalue is better, but more troublesome.

I have thought about it, and OK, let's just use _pvalue.

That avoids the headache of readjusting the column width for now.

Pushed the _pvalue variant.
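
The naming trade-off from this thread can be illustrated with a small sketch: the p-value row reuses the benchmark name plus a suffix, so `grep BM_Two` catches it, and the first column has to be as wide as the longest resulting name. The helper names below (`pvalue_row_name`, `first_column_width`) are hypothetical and not taken from `report.py`.

```python
def pvalue_row_name(benchmark_name, suffix="_pvalue"):
    """Name the U-test row after its benchmark so grep finds both rows."""
    return benchmark_name + suffix


def first_column_width(row_names, header="Benchmark"):
    """Width needed so that no name (or the header) overflows column one."""
    return max(len(name) for name in list(row_names) + [header])


names = ["BM_One", "BM_Two", pvalue_row_name("BM_Two")]
width = first_column_width(names)
for name in names:
    # Left-justify each name in the shared column width.
    print("{:<{w}}|".format(name, w=width))
```

A longer suffix such as `_utest_pvalue` would widen that column by six characters, which is the realignment cost mentioned above.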

As previously discussed, let's flip the switch ^^.

This exposes a problem: the U test will now run for everyone,

including those who did not read the help text

about the recommended repetition count.

This is not good. So I think we can do the smart thing:

```

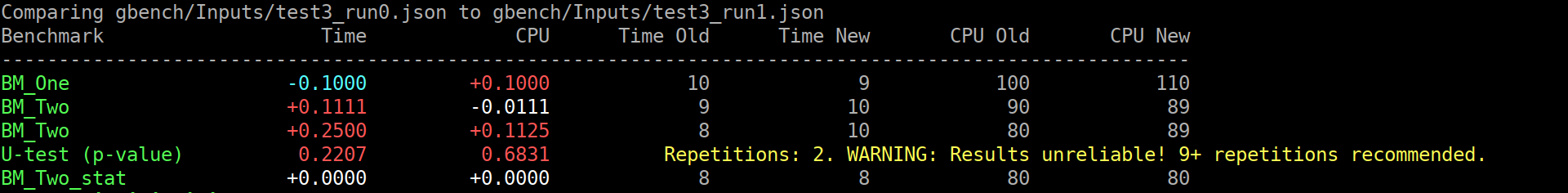

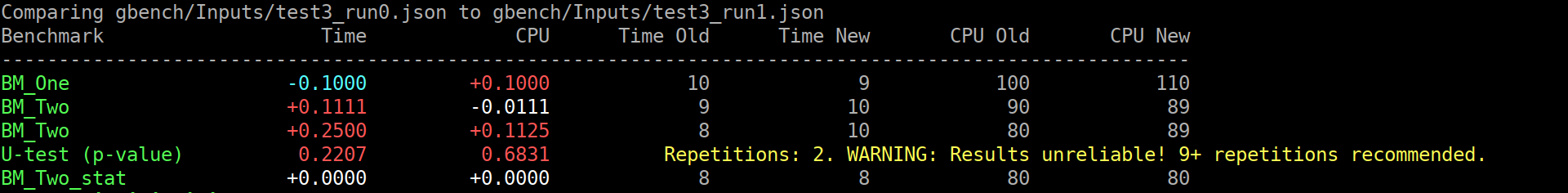

$ ./compare.py benchmarks gbench/Inputs/test3_run{0,1}.json

Comparing gbench/Inputs/test3_run0.json to gbench/Inputs/test3_run1.json

Benchmark Time CPU Time Old Time New CPU Old CPU New

--------------------------------------------------------------------------------------------------------

BM_One -0.1000 +0.1000 10 9 100 110

BM_Two +0.1111 -0.0111 9 10 90 89

BM_Two +0.2500 +0.1125 8 10 80 89

BM_Two_pvalue 0.2207 0.6831 U Test, Repetitions: 2. WARNING: Results unreliable! 9+ repetitions recommended.

BM_Two_stat +0.0000 +0.0000 8 8 80 80

```

(old screenshot)

Or, in the good case (noise omitted):

```

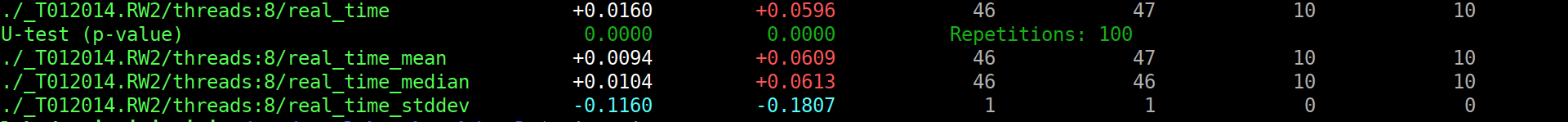

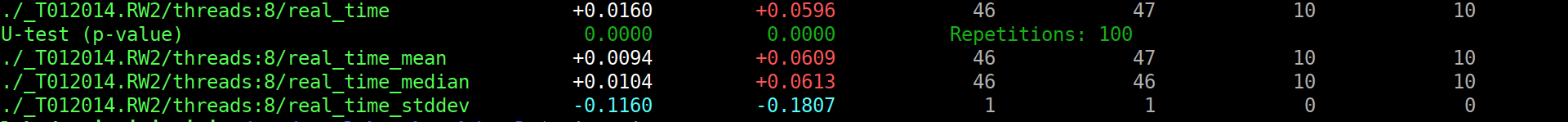

s$ ./compare.py benchmarks /tmp/run{0,1}.json

Comparing /tmp/run0.json to /tmp/run1.json

Benchmark Time CPU Time Old Time New CPU Old CPU New

---------------------------------------------------------------------------------------------------------------------------------

<99 more rows like this>

./_T012014.RW2/threads:8/real_time +0.0160 +0.0596 46 47 10 10

./_T012014.RW2/threads:8/real_time_pvalue 0.0000 0.0000 U Test, Repetitions: 100

./_T012014.RW2/threads:8/real_time_mean +0.0094 +0.0609 46 47 10 10

./_T012014.RW2/threads:8/real_time_median +0.0104 +0.0613 46 46 10 10

./_T012014.RW2/threads:8/real_time_stddev -0.1160 -0.1807 1 1 0 0

```

(old screenshot)
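
The two outputs above differ only in the trailing note on the `_pvalue` row. A rough sketch of how such a note could be assembled follows. The threshold of 9 comes from the warning text, while the names `UTEST_OPTIMAL_REPETITIONS` and `utest_note`, and the choice to report the smaller of the two sample counts, are assumptions rather than the code from this PR.

```python
# Taken from the "9+ repetitions recommended" text; the constant name is assumed.
UTEST_OPTIMAL_REPETITIONS = 9


def utest_note(repetitions_old, repetitions_new):
    """Build the text shown after the two p-values on a _pvalue row."""
    # Assumption: the smaller of the two sample counts is what gets reported.
    repetitions = min(repetitions_old, repetitions_new)
    note = "U Test, Repetitions: {}".format(repetitions)
    if repetitions < UTEST_OPTIMAL_REPETITIONS:
        # Too few samples: append the warning so it is visible in the output
        # even to users who never read the help text.
        note += ". WARNING: Results unreliable! {}+ repetitions recommended.".format(
            UTEST_OPTIMAL_REPETITIONS)
    return note


print(utest_note(2, 2))      # the warning case
print(utest_note(100, 100))  # the good case
```

Putting the warning in the output row itself means it reaches users who enabled nothing and read nothing, which is the point of turning the U test on by default.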

@dominichamon thank you!
