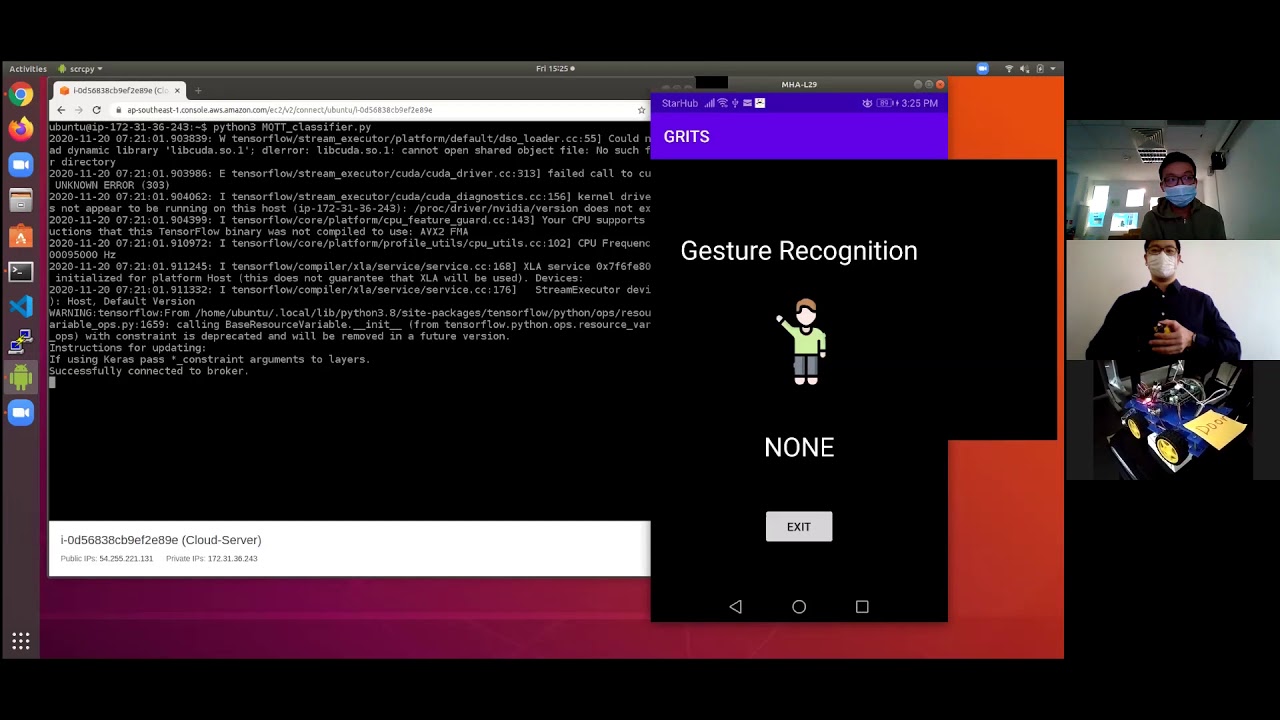

Gesture Recognition Integrated System

- Training_acc = 0.9933

- Test_acc = 0.96762

Download and watch 'CS3237 Demo.mp4' to see a demonstration of the project, or watch it here:

Nothing, project completed!

This project runs on Linux and on an AWS Ubuntu 20.04.1 LTS t2.micro server, because the following dependencies do not work on Windows:

- bluepy for the TI CC2650 SensorTag

- paho-mqtt and mosquitto for MQTT

A virtual environment is recommended to prevent clashes or overwriting of your main installation.

pip3 install -r requirements.txt

sudo apt-get install mosquitto mosquitto-clients

pip3 install paho-mqtt

https://github.com/IanHarvey/bluepy

Follow the instructions there to install the library and the bluepy package. bluepy is needed on the device acting as the gateway between the SensorTag and the cloud.

The provided model accurately classifies the 4 gestures shown in the demo: Buddha Clap, Pushback, Swipe, and Knob right. Note that the model was trained on data recorded with the right hand and with the sensor mounted in a specific orientation.

Gateway:

1. user_sensor.py for recording from the SensorTag and sending data

2. MQTT_gesture.py for monitoring the cloud output

Cloud:

1. MQTT_classifier.py

2. lstm_model_100_50

In the 3 Python files above, change the IP address and MQTT authorization details to match your server. In the main of user_sensor.py, also change the SensorTag BLE address and my_sensor.
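If editing the same values in three files becomes error-prone, one option is to read them from a single place. The following is only a minimal sketch of that idea, not how the repo's scripts are written: the class name, the function name, and every environment variable name are hypothetical, and the default address values are placeholders.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class GatewaySettings:
    """Values that the steps above ask you to change (hypothetical container)."""
    broker_host: str        # IP address or hostname of your MQTT server
    broker_port: int        # 1883 is the standard unencrypted MQTT port
    mqtt_username: str
    mqtt_password: str
    sensortag_address: str  # BLE MAC address of your SensorTag


def load_settings(env=os.environ) -> GatewaySettings:
    """Build settings from a mapping; all variable names are assumptions."""
    return GatewaySettings(
        broker_host=env.get("GESTURE_BROKER_HOST", "127.0.0.1"),
        broker_port=int(env.get("GESTURE_BROKER_PORT", "1883")),
        mqtt_username=env.get("GESTURE_MQTT_USER", ""),
        mqtt_password=env.get("GESTURE_MQTT_PASS", ""),
        sensortag_address=env.get("GESTURE_SENSORTAG_ADDR", "00:00:00:00:00:00"),
    )


# Example with an explicit mapping instead of the real environment.
settings = load_settings({"GESTURE_BROKER_HOST": "203.0.113.10"})
print(settings.broker_host, settings.broker_port)
```

The resulting values could then be passed to paho-mqtt's `username_pw_set` and `connect` calls in each script.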

Unzip the ready_data.zip for our processed data.

or

- Run sensor.py in the deprecated folder to record your own data; change the SensorTag address and filename first.

- Combine your recorded data into a single .csv per gesture. Put them in raw_data.

- Edit window-mod.ipynb to fit your parameters, gestures and .csv files.

- Run the notebook window-mod.ipynb

- This processes raw_data and writes the output to your local repo.

- We have excluded these output files from our online repo because the uncompressed files are too big (>600MB).

- ready_data folder
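The windowing done by window-mod.ipynb is not reproduced here. As a rough sketch of the general technique, assuming that the 100_50 in lstm_model_100_50 means a window of 100 samples with an overlap of 50, overlapping windows can be cut from a recording as follows. The function name and the three-value readings are made up for illustration.

```python
def sliding_windows(samples, window=100, overlap=50):
    """Split a sequence of sensor readings into overlapping windows.

    Consecutive windows start `window - overlap` samples apart; a trailing
    partial window is dropped.
    """
    if not 0 <= overlap < window:
        raise ValueError("overlap must satisfy 0 <= overlap < window")
    step = window - overlap
    return [
        samples[start:start + window]
        for start in range(0, len(samples) - window + 1, step)
    ]


# 250 made-up readings with 3 values each (not real SensorTag data).
readings = [[i, i * 2, i * 3] for i in range(250)]
windows = sliding_windows(readings)
print(len(windows), len(windows[0]))  # 4 windows of 100 readings each
```

With these defaults, windows start at samples 0, 50, 100 and 150, so each reading after the first 50 appears in two windows.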

You can open the data via the text files; make sure to unroll (reshape) each entry to recover its original dimensions.
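The exact layout of the text files is not described here, so the following is only a sketch under stated assumptions: each line holds one flattened window, values are whitespace-separated, and they are stored row by row (all values of the first time step, then the second, and so on). The function names and the sample line are hypothetical.

```python
def restore_window(flat_values, n_features):
    """Reshape a flat list into rows of `n_features` values (one row per time step)."""
    if len(flat_values) % n_features != 0:
        raise ValueError("flat length is not a multiple of n_features")
    return [
        flat_values[i:i + n_features]
        for i in range(0, len(flat_values), n_features)
    ]


def parse_line(line, n_features):
    """Parse one whitespace-separated line and restore its 2-D shape."""
    values = [float(v) for v in line.split()]
    return restore_window(values, n_features)


# A made-up line: 2 time steps with 3 sensor values each.
line = "0.1 0.2 0.3 1.1 1.2 1.3"
window = parse_line(line, n_features=3)
print(window)  # [[0.1, 0.2, 0.3], [1.1, 1.2, 1.3]]
```

With NumPy installed, `numpy.loadtxt` followed by `reshape` achieves the same result for a whole file at once.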

Run lstm_csv.ipynb

This extracts the processed data and trains the model, saving it into the lstm_models folder and showing the model's performance and accuracy over the epochs. The model is then evaluated on the test data, and the incorrect classifications are written to an output.csv for your further analysis.
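The evaluation step described above can be sketched as follows. This is an illustration of the idea, not the notebook's code: the column names, the function names, and the label lists are assumptions, and the real output.csv may be laid out differently.

```python
import csv
import io


def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def misclassified_rows(true_labels, predicted_labels):
    """Collect one record per incorrect prediction."""
    return [
        {"index": i, "true": t, "predicted": p}
        for i, (t, p) in enumerate(zip(true_labels, predicted_labels))
        if t != p
    ]


def write_errors(rows, handle):
    """Write the misclassifications as CSV to an open text handle."""
    writer = csv.DictWriter(handle, fieldnames=["index", "true", "predicted"])
    writer.writeheader()
    writer.writerows(rows)


# Made-up labels for illustration only (not results from the project).
true_labels = ["Swipe", "Pushback", "Buddha Clap", "Knob right"]
predicted = ["Swipe", "Swipe", "Buddha Clap", "Knob right"]

errors = misclassified_rows(true_labels, predicted)
buffer = io.StringIO()  # stands in for open("output.csv", "w", newline="")
write_errors(errors, buffer)
print(accuracy(true_labels, predicted))
print(buffer.getvalue())
```

Sorting or grouping the error rows by true label makes it easier to spot a gesture that is confused with the others.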

Run the Test code to test any model

You can also change model_name to test any other model and get its output. Run the first 3 cells to import the test data if needed.

Just extra workbooks for debugging and processing steps

- plot.ipynb: For visualising the recorded raw data

- performance_figures: Performance comparison between the 100_50 and 50_25 (window_overlap) configurations, and the error output of different models for analysis. The figures show that the Crank gesture causes many misclassifications, hence our decision to drop it.

- android: Code base to develop and use the app shown in the Demo.

- esp32_mqtt_aws_direct: Code used to program the ESP32 for our demo. It also serves as an example of how to connect an ESP32 to AWS over MQTT with the correct baud rate and setup.

- helper-code: bluepy and miscellaneous code.